Tyler Griggs

I am a third-year PhD student in Computer Science in the UC Berkeley Sky Computing Lab, advised by Ion Stoica and Matei Zaharia and supported by the Amazon AI PhD Fellowship. Previously, I worked in Network Infrastructure at Google Cloud. Before that, I graduated from Harvard with a BA in Computer Science, advised by James Mickens.

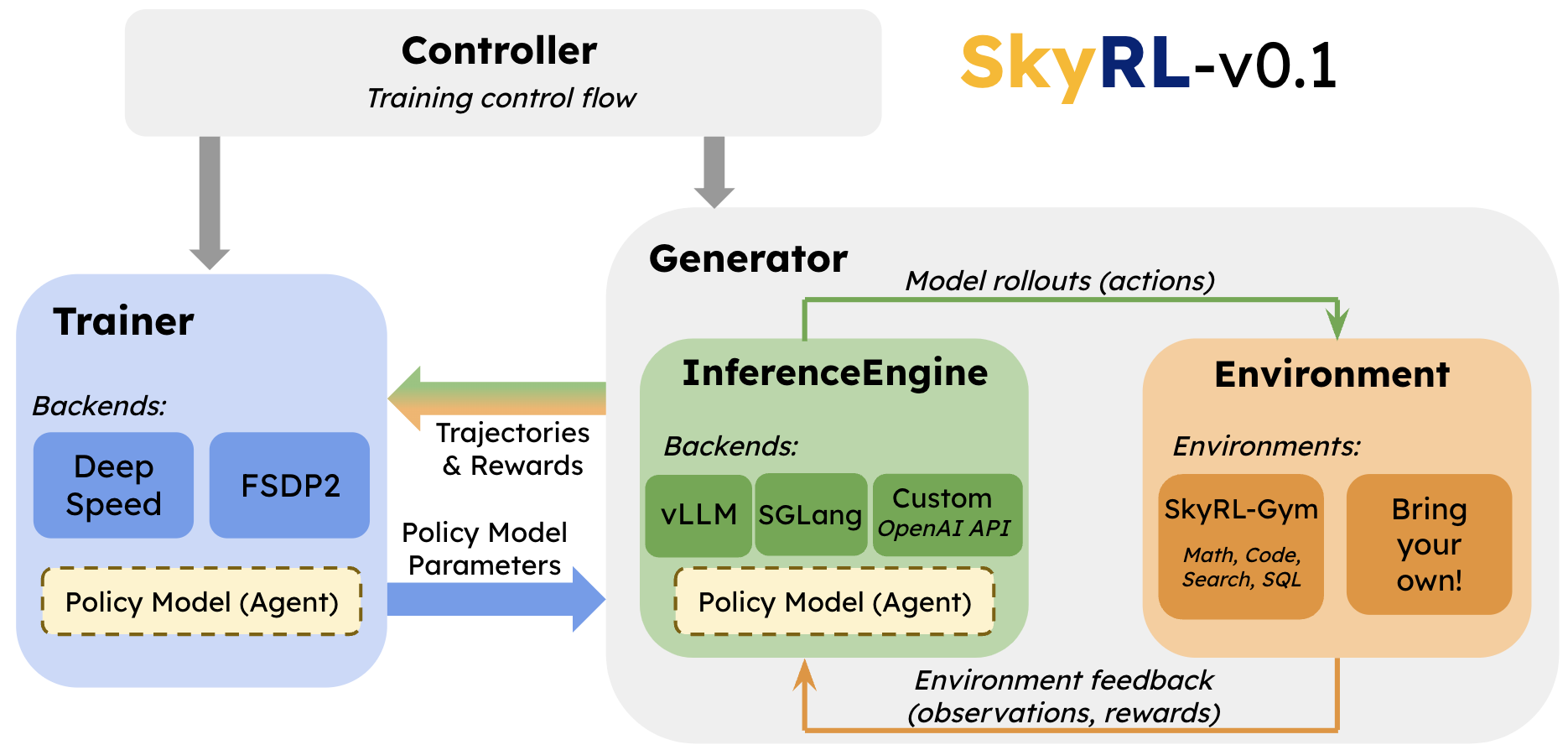

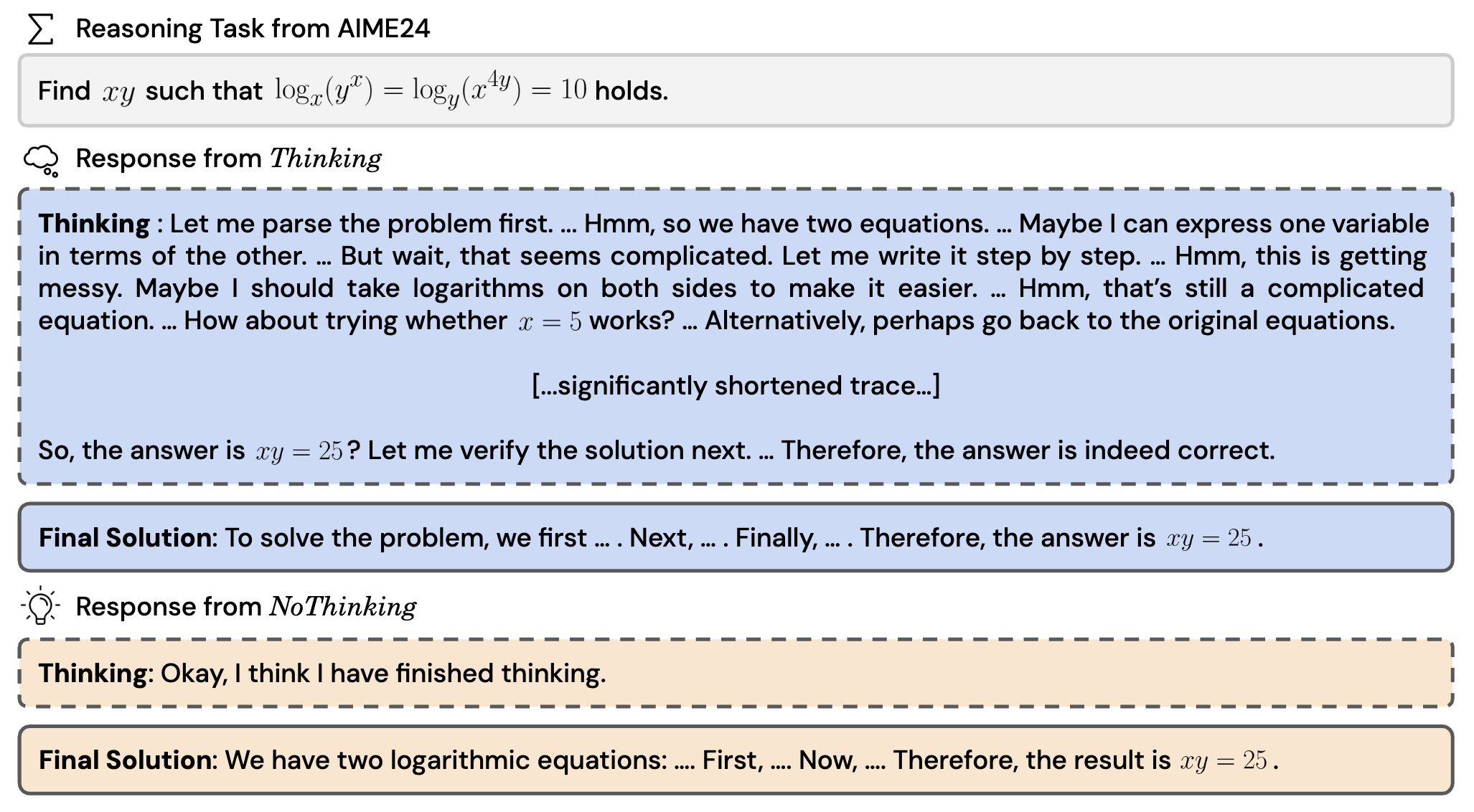

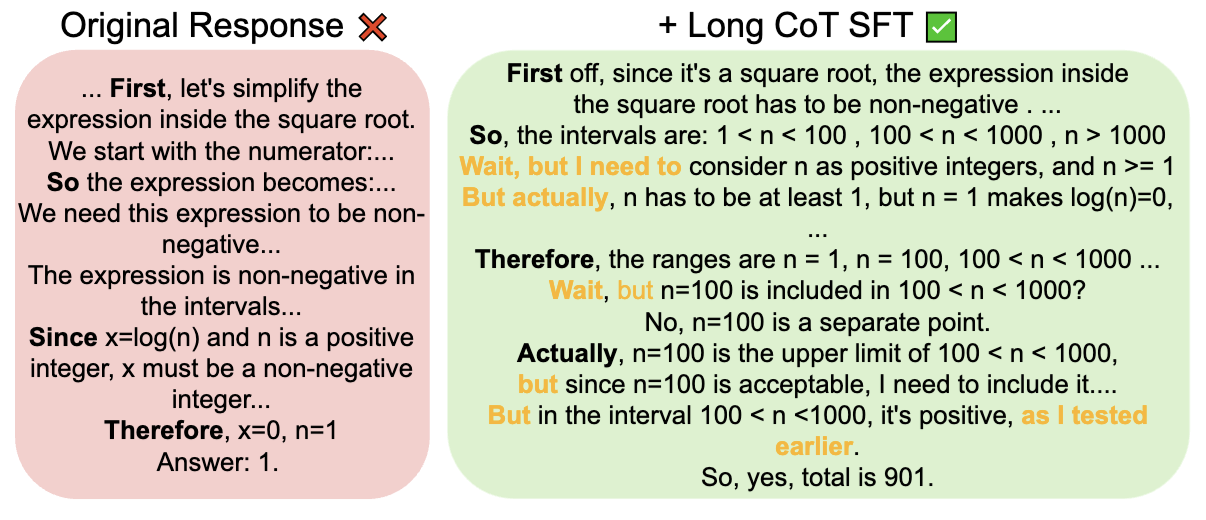

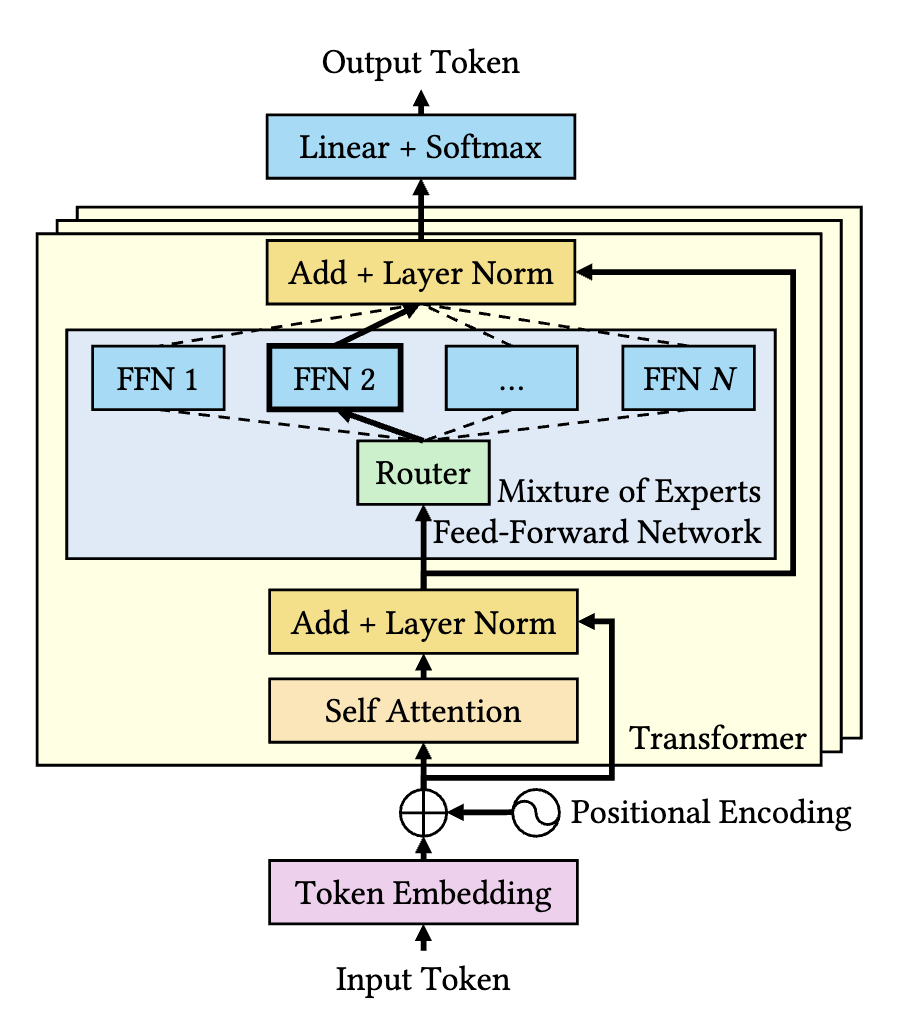

My research interests are in designing and building flexible, efficient systems for model training and inference, and in leveraging these systems to develop models that are useful for real-world tasks. My current research focuses on systems for post-training, especially reinforcement learning. Along with several wonderful collaborators, I co-lead the NovaSky team and am building SkyRL. Our work has been featured in The New York Times, The Wall Street Journal, and The Information.